Backing up important data and memories is a task that should not be neglected. Just as important as performing Linux backups is verifying that the backups are good and can be used to recover from a data disaster. The principal technologies typically considered for verifiable backups are cloud backups and saving the data to a system that uses ZFS. Apple’s Time Machine is not covered here, since it has no mechanism to detect data degradation and the database that it saves data to can itself become corrupt.

Cloud Backup

Cloud backup services like Dropbox or CrashPlan offer a very compelling price point, with the promise that uploaded data is safe and protected from corruption. As some customers have discovered, that promise does not always hold. Although cases of cloud corruption are few and far between, the consequences of putting the responsibility for managing and protecting important data into someone else’s hands can be severe (total loss of the backup).

ZFS Filesystem

ZFS is a filesystem for Solaris, FreeBSD, and Linux that protects against data corruption and can repair it with little user intervention. ZFS lets the user perform a ZFS send operation to back up a copy of the pool to an external backup. However, it provides no mechanism to protect that backup from data degradation if it is not stored on a ZFS partition.

Linux Backup System utilizing rsync, rsnapshot, dar, and par2

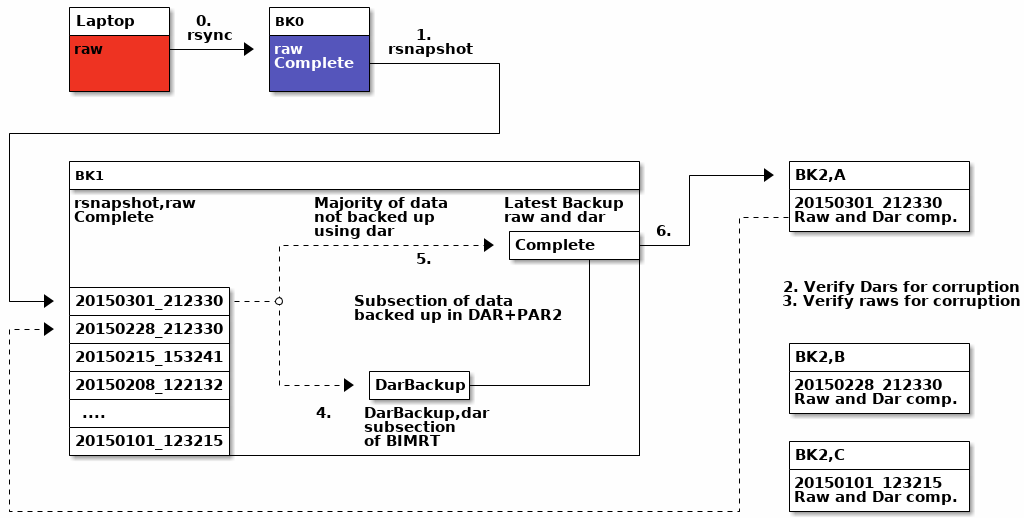

I have combined several backup technologies into a backup system that creates snapshots of important data and saves each snapshot to a series of external hard drives. The drives can be distributed across different geographical regions, and each one contains a complete copy of the data.

Overall Linux Backup System Design

The backup system was designed to provide backup redundancy, along with detection of and recovery from data degradation, in every backup phase. Important data is stored in disk archives (DAR) with parity files that can correct corruption of up to 10 percent of the archive. Less important data that is not stored in disk archives is compared against the snapshot it was created from, which validates both the backup and that particular snapshot. Automatic archive testing and recovery are integrated into the system, so the backups are verifiable and recoverable: data degradation is detected and repaired if it occurs.
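The comparison of a backup against its reference snapshot can be illustrated with a short Python sketch. This is not the author's script: the post does not say which tool performs the comparison, so the use of SHA-256 and the function names `file_digest` and `compare_trees` are assumptions made for illustration.

```python
import hashlib
from pathlib import Path


def file_digest(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def compare_trees(snapshot: Path, backup: Path) -> dict:
    """Check every regular file under `snapshot` against its counterpart in `backup`.

    Returns the relative paths that are missing from the backup and the
    paths whose contents differ (assumed here to indicate corruption).
    """
    report = {"missing": [], "mismatched": []}
    for source in sorted(p for p in snapshot.rglob("*") if p.is_file()):
        relative = source.relative_to(snapshot)
        target = backup / relative
        if not target.is_file():
            report["missing"].append(relative.as_posix())
        elif file_digest(source) != file_digest(target):
            report["mismatched"].append(relative.as_posix())
    return report
```

An empty report means every file in the snapshot has a byte-identical counterpart in the backup. A mismatch alone does not say which side is damaged, which is one reason the more important data additionally carries parity files.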

The figure above shows all parts of the backup system. Each external drive is labeled by its backup phase: BK0, BK1, and BK2,N. Since the laptop doesn’t have enough storage to hold all of the data, it performs a partial backup to the first backup drive, BK0. BK0 is therefore the first drive that contains the entire backup, in a raw, uncompressed form.

Phase 0: Laptop to BK0 (first full backup)

This phase is meant to be a regular backup that runs daily. The script works through a specific list of folders, running rsync on each with the archive and checksum options enabled. In all of the backup scripts, the exit status $? is checked after each command and the script aborts if an error occurs. This important detail allows failures in the backup system to be detected quickly.
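The author's scripts are shell scripts and are not shown in this post, so the following is only a minimal Python sketch of the same idea: run rsync with the archive and checksum options on each folder, and stop at the first non-zero exit status. The function names and the `runner` parameter are hypothetical, introduced here so the logic can be exercised without invoking rsync.

```python
import subprocess


def build_rsync_command(source: str, destination: str) -> list:
    """Build an rsync call with archive mode and full-file checksums enabled."""
    return ["rsync", "--archive", "--checksum", source, destination]


def run_backup(folders, destination, runner=subprocess.run):
    """Run rsync on each folder in turn, aborting at the first failure.

    `runner` defaults to subprocess.run; any callable that returns an
    object with a `returncode` attribute can stand in for it.
    """
    for folder in folders:
        result = runner(build_rsync_command(folder, destination))
        if result.returncode != 0:
            # Equivalent to testing $? in a shell script and exiting.
            raise RuntimeError(
                f"rsync failed for {folder} (exit status {result.returncode})"
            )
```

A daily job could call something like `run_backup(["/home/user/Documents"], "/mnt/bk0/")`, where both paths are placeholders. Because the exception stops the loop, later folders are not synced after a failure, which makes the problem visible immediately instead of letting it pass unnoticed.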

Phase 1: Snapshot of BK0 to BK1

A weekly or semi-regular snapshot of the state of BK0 is taken using the rsnapshot utility. The checksum flag is set in rsnapshot.conf so that a complete checksum is computed for each file before deciding whether that file should be transferred. Because the checksum is computed before files are transferred, subtle corruption in BK1 is detected and each corrupt file is replaced with a good copy. Rsnapshot relies on a feature of Unix systems called hard links. Hard links allow several file names to refer independently to the same underlying file. This is very useful for keeping multiple backups in which only a few files change: unchanged files are simply linked into the new snapshot, so the space used on the backup drive grows only by the size of the changed files. Each rsnapshot snapshot is a fixed point in time that the BK2,N storage devices can be compared against.
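To make the hard link behavior concrete, here is a small Python sketch that links one file under two names and inspects what the filesystem reports. The directory names `snapshot.0` and `snapshot.1` and the function `hard_link_demo` are hypothetical and only mimic the general shape of a snapshot layout; this shows the mechanism, not how rsnapshot is implemented.

```python
import os
from pathlib import Path


def hard_link_demo(workdir: Path) -> dict:
    """Create a file, hard-link it under a second name, and report the result."""
    original = workdir / "snapshot.1" / "photo.jpg"
    linked = workdir / "snapshot.0" / "photo.jpg"
    original.parent.mkdir(parents=True)
    linked.parent.mkdir(parents=True)
    original.write_bytes(b"unchanged file contents")

    # Add a second name for the same data; no file contents are copied.
    os.link(original, linked)

    first, second = original.stat(), linked.stat()
    info = {
        "same_inode": first.st_ino == second.st_ino,
        "link_count": first.st_nlink,
    }

    # Removing one name leaves the data reachable through the other.
    original.unlink()
    info["survives_unlink"] = linked.read_bytes() == b"unchanged file contents"
    return info
```

On a filesystem that supports hard links, both names share one inode and the link count is 2, so the second snapshot costs almost no extra space. Deleting an old snapshot removes only its names; the data stays on disk as long as any other snapshot still links to it.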

Since describing the details of this Linux backup system is quite involved, I will continue investigating it in subsequent blog posts. I’ll update this post with links to the additional posts as they are published. Look for a GitHub link to the required scripts in the final blog post.

Continue reading: Multi-Tiered Linux Backup Systems – Part II (Coming Soon)